Small language models (SLMs) can outperform large language models (LLMs) in many engineering applications. That idea seems counterintuitive because we often assume that more information technology capacity is better for search, data analytics and digital transformation. However, many artificial intelligence (AI) applications don't require an LLM.

SLMs offer numerous advantages for small, specialized AI applications, such as those in digital transformation projects. LLMs remain more effective for large, general-purpose AI applications.

Let's compare SLMs to LLMs for digital transformation projects. This article is the second in a series.

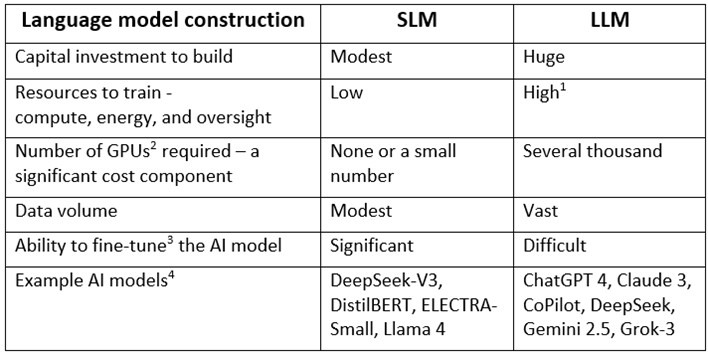

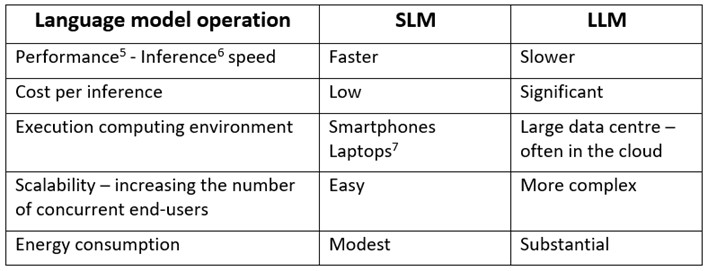

Language model construction and operation

SLMs are much cheaper to construct than LLMs because they have far fewer parameters and are trained on much less data. This lower cost makes SLMs particularly attractive for digital transformation projects.

SLMs are also much cheaper to operate and respond faster than LLMs. With fewer parameters, an SLM performs far fewer calculations to generate each inference.
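A widely used rule of thumb from the scaling-law literature makes this cost difference concrete. Training a transformer model takes roughly 6 × N × D floating-point operations, where N is the number of parameters and D is the number of training tokens. Generating each output token takes roughly 2 × N operations. The sketch below applies those approximations to two models. The model sizes and token counts are hypothetical assumptions chosen only for illustration and do not describe any specific product.

```python
# Rough compute comparison using common scaling-law rules of thumb:
#   training FLOPs  ~= 6 * parameters * training_tokens
#   inference FLOPs ~= 2 * parameters per generated token
# All model sizes below are hypothetical, chosen only for illustration.
from dataclasses import dataclass


@dataclass
class ModelSpec:
    name: str
    parameters: float       # number of model parameters (hypothetical)
    training_tokens: float  # tokens in the training data (hypothetical)


def training_flops(m: ModelSpec) -> float:
    """Approximate total floating-point operations to train the model."""
    return 6 * m.parameters * m.training_tokens


def inference_flops(m: ModelSpec, output_tokens: int) -> float:
    """Approximate floating-point operations to generate output_tokens tokens."""
    return 2 * m.parameters * output_tokens


slm = ModelSpec("hypothetical SLM", parameters=3e9, training_tokens=1e11)
llm = ModelSpec("hypothetical LLM", parameters=70e9, training_tokens=2e12)

# Ratios show how many times more compute the larger model needs.
train_ratio = training_flops(llm) / training_flops(slm)
infer_ratio = inference_flops(llm, 500) / inference_flops(slm, 500)

print(f"Training compute ratio (LLM / SLM):  {train_ratio:.0f}x")
print(f"Inference compute ratio (LLM / SLM): {infer_ratio:.1f}x")
```

Under these assumptions, the hypothetical LLM needs about 467 times the training compute and about 23 times the compute per generated token. Real costs also depend on hardware, numeric precision, batching, memory bandwidth and prompt length, which these approximations ignore. Treat the ratios as orders of magnitude rather than precise estimates.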

Impediments to implementing an SLM

What’s keeping organizations from implementing an SLM for a digital transformation project?

- Poor internal digital data quality and accessibility.

- Insufficient subject matter expertise to curate the specialized data required for the contemplated SLM.

- Too much data held in unstructured paper records.

- Insufficient AI technical skills.

- Uncertain business case for the digital transformation project.

- Immature AI tools and vendor solutions.

- Immature project management practices.

How will SLMs and LLMs evolve?

The most likely trends for SLMs and LLMs in the foreseeable future include:

- Increasing numbers of organizations will use both SLMs and LLMs as the benefits of AI applications become clearer and more organizations acquire the skills to implement and operate the applications.

- Both SLMs and LLMs will grow in size and sophistication as software improves and data quality increases.

- Both SLMs and LLMs will improve in performance as software for inference processing improves and incorporates reasoning.

- The training costs for SLMs and LLMs will decrease as training algorithms are optimized.

- Context windows, which limit the amount of text (measured in tokens) a prompt can include, will grow.

- Integration of AI models with enterprise applications will become more widespread.

- Hosting SLM-based AI applications internally will appeal to more organizations because the cost is affordable and because internal hosting mitigates the risk of losing control over proprietary information.

- Hosting an LLM internally will remain too costly. It is also unnecessary when the organization has published and enforces an AI usage policy, as described in this article: Why You Need a Generative AI Policy.

- The clear distinction between SLMs and LLMs will blur as medium language models (MLMs), also called small large language models (sLLMs), are built and deployed.

- LLMs will reduce hallucinations by fact-checking against external sources and providing references for their inferences.

SLMs offer numerous advantages for digital transformation projects, as these projects often utilize domain-specific data. LLMs are more effective for large, general-purpose AI applications that require vast data volumes.

Footnotes

- This high consumption of resources is driving the many announcements about building large, new data centers and related electricity generation capacity. Training an LLM can cost more than $10 million each time.

- GPU stands for Graphics Processing Unit. GPU chips are particularly well-suited for the types of calculations that AI models perform in great quantity.

- Fine-tuning is a manual process with automated support where a trained AI model is further refined to improve its accuracy and performance.

- Selection criteria for choosing an AI model are described in this article: Making smarter AI choices.

- Performance is also called latency. In either case, it refers to the elapsed time from when the end-user submits the prompt to when the AI application's output appears on the monitor.

- Inference is the process an AI model performs to generate text in response to the prompt it receives.

- Sometimes called edge devices or edge computing.

The post How small language models can advance digital transformation – part 2 appeared first on Engineering.com.